Information entropy measures the unpredictability, or uncertainty, of a data source: the average amount of information (or “surprise”) that each of its outcomes produces.

Shannon entropy quantifies the randomness of a probability distribution and is defined as follows:

$$H(X) = -\sum_{i} p(x_i)\,\log_2 p(x_i)$$

where $p(x_i)$ is the probability of outcome $x_i$.
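The formula translates directly into a few lines of code. The sketch below is illustrative and not taken from the project. `shannon_entropy` evaluates $H(X)$ in bits (because of the base-2 logarithm) for a given distribution. The hypothetical helper `empirical_entropy` estimates $p(x_i)$ from observed samples, such as a run of quantized temperature readings, using relative frequencies.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(probs: Iterable[float]) -> float:
    """Return H(X) = -sum p(x_i) * log2 p(x_i), in bits.

    Zero-probability outcomes are skipped, following the convention 0 * log2(0) = 0.
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)


def empirical_entropy(samples: Iterable[Hashable]) -> float:
    """Estimate H(X) from samples, using relative frequencies as p(x_i)."""
    counts = Counter(samples)
    total = sum(counts.values())
    return shannon_entropy(c / total for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))            # 1.0 (a fair coin)
    print(round(shannon_entropy([0.9, 0.1]), 3))  # 0.469 (a biased, more predictable source)
    print(empirical_entropy([21, 21, 22, 23]))    # 1.5
```

A uniform distribution maximizes entropy, and a skewed one lowers it. This is why a sensor whose readings rarely change is a low-entropy source.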

In the context of Heat Mapping Entropy:

- Each temperature sensor reading is a random variable whose value changes over time. Large temperature spikes are rare, so they have low probability and high self-information, $-\log_2 p(x_i)$. Small, frequent changes are expected and carry little information.

- The sensor mapping code computes a temperature score that prioritizes spikes in temperature, so the most informative sensor claims the hotspot.

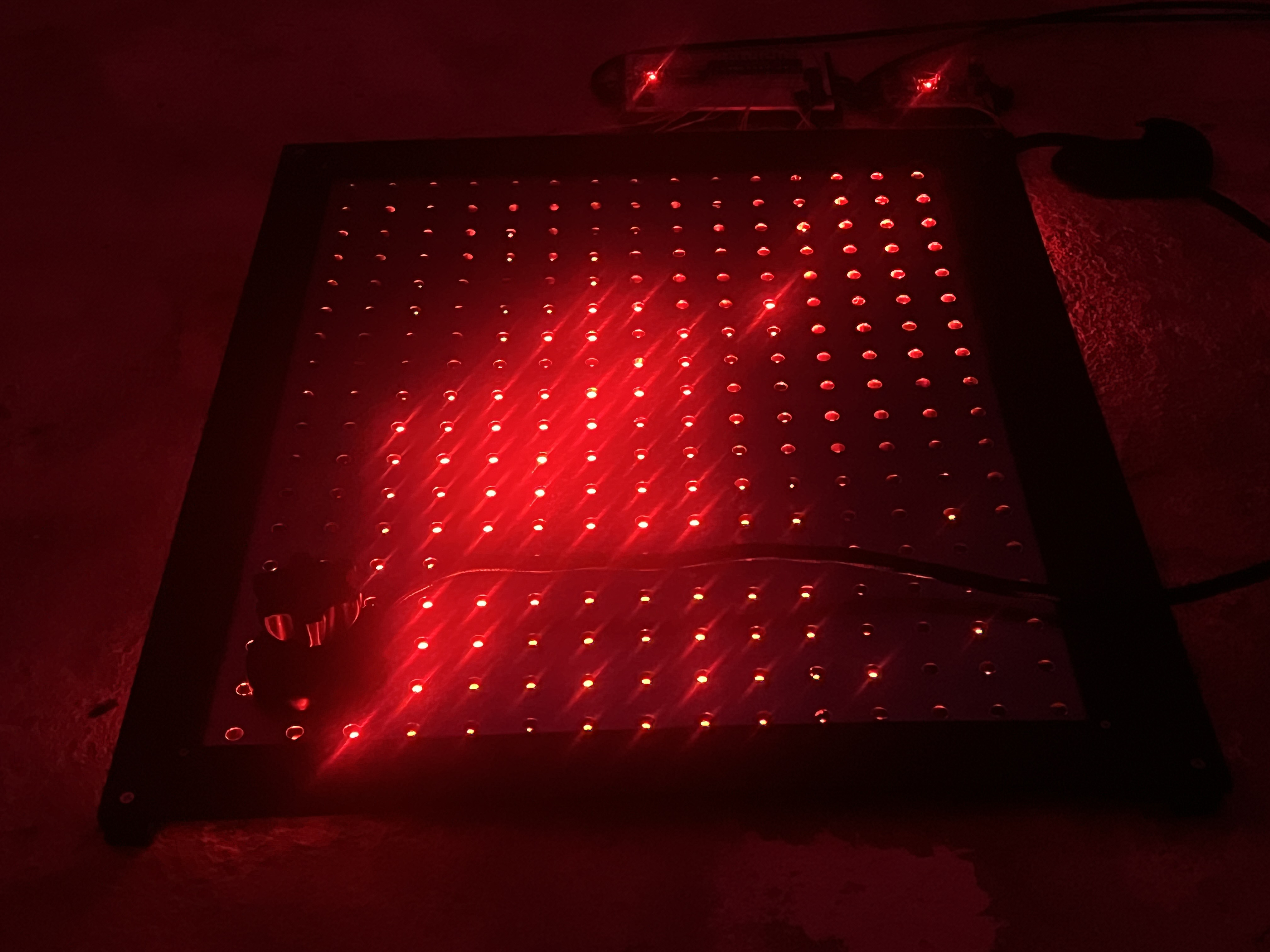
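The project's actual scoring code appears only in the image above, so the following is a hypothetical sketch of an information-based score and does not reproduce that code. It assumes each sensor keeps a short window of recent readings. Each temperature change is quantized into a bin of width `BIN_WIDTH_C` (an assumed 0.5 °C), and the latest change is scored by its self-information, $-\log_2 p$, where $p$ is that bin's relative frequency in the window. `BIN_WIDTH_C`, `surprise_score`, and `pick_hotspot` are illustrative names.

```python
import math
from collections import Counter
from typing import Dict, List

# Hypothetical quantization step for temperature changes, in degrees Celsius.
BIN_WIDTH_C = 0.5


def _bin(delta: float) -> int:
    """Quantize a temperature change into a discrete outcome x_i."""
    return round(delta / BIN_WIDTH_C)


def surprise_score(history: List[float]) -> float:
    """Self-information -log2 p(x) of the most recent temperature change.

    p(x) is the relative frequency of the latest change's bin among all changes
    in the window, so a one-off spike scores high and routine jitter scores ~0.
    """
    if len(history) < 2:
        return 0.0
    deltas = [_bin(b - a) for a, b in zip(history, history[1:])]
    counts = Counter(deltas)
    p_latest = counts[deltas[-1]] / len(deltas)
    return -math.log2(p_latest)


def pick_hotspot(readings: Dict[str, List[float]]) -> str:
    """Return the name of the sensor whose latest change is most surprising."""
    return max(readings, key=lambda name: surprise_score(readings[name]))


if __name__ == "__main__":
    steady = [20.0, 20.1, 20.0, 20.1, 20.0]  # small jitter only
    spike = [20.0, 20.0, 20.0, 20.0, 25.0]   # sudden 5 °C jump
    print(surprise_score(steady))  # 0.0 (every change falls in the same bin)
    print(surprise_score(spike))   # 2.0 (the jump is 1 of 4 changes)
    print(pick_hotspot({"sensor_a": steady, "sensor_b": spike}))  # sensor_b
```

Scoring by self-information rather than raw magnitude means a sensor that swings widely all the time is not automatically favored. What counts is how unusual the latest change is compared with that sensor's own recent behavior.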

- The LED matrix acts as a noisy channel, with fading, arcs, and overlapping heat patterns introducing controlled “noise.” These dynamics behave loosely like error correction: they preserve the essential information while smoothing out minor fluctuations.
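One plausible way to model fading is an exponential moving average over LED brightness, which acts as a low-pass filter. This is an assumption, because the project's fade, arc, and overlap logic is not shown here. In the sketch, the matrix is flattened to a 1-D list of pixel brightnesses in [0, 1]. `fade_frame`, `run`, and the blend factor `alpha` are hypothetical names.

```python
from typing import List


def fade_frame(prev: List[float], heat: List[float], alpha: float = 0.3) -> List[float]:
    """Blend new heat values into the previous LED frame (exponential moving average).

    Each pixel moves a fraction `alpha` toward its new heat value, so a one-frame
    blip is attenuated while heat that persists over several frames builds up.
    """
    return [(1 - alpha) * p + alpha * h for p, h in zip(prev, heat)]


def run(frames: List[List[float]], alpha: float = 0.3) -> List[float]:
    """Apply fade_frame over a sequence of heat frames, starting from a dark matrix."""
    state = [0.0] * len(frames[0])
    for heat in frames:
        state = fade_frame(state, heat, alpha)
    return state


if __name__ == "__main__":
    # Pixel 0: a single-frame blip. Pixel 1: a hotspot sustained for 5 frames.
    frames = [[1.0, 1.0]] + [[0.0, 1.0]] * 4
    final = run(frames)
    print([round(v, 3) for v in final])  # about [0.072, 0.832]
```

A transient blip fades to a small fraction of full brightness, while the sustained hotspot stays bright. This matches the idea that minor fluctuations are smoothed out while the persistent, informative signal survives.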

Heat Mapping Entropy illustrates Shannon’s principle that meaningful information can be reliably encoded, transmitted, and decoded even in noisy environments. Entropy measures the uncertainty of the source, and related entropy-based quantities (mutual information) describe the channel's effective capacity to convey that information visually.